Automated Weekly AI Newsletter

Published on 15.10.2025

During one of happyhotel's Dev Offsites, AI trends dominated our team discussions. An open space session introduced me to n8n, an AI workflow automation framework. The idea struck me immediately: instead of struggling to keep up with the flood of AI research, why not build a system that filters and curates it for me?

The Technical Stack

N8n offers both cloud-hosted and self-hosted options. Since I was building a lightweight workflow and wanted to avoid subscription costs, I opted for the self-hosted version deployed via Docker on my server.

Initially, I attempted to access the n8n UI through an Apache reverse proxy to enable SSL encryption. However, n8n proved surprisingly restrictive with reverse proxy configurations, and I found myself losing too much time troubleshooting. I pivoted to a simpler approach: accessing the UI without SSL and using a cronjob to start the Docker container only when needed, either for the weekly workflow execution or manual configuration.

This decision taught me something valuable: pragmatism matters. For personal projects, shipping a working solution beats endlessly optimizing for theoretical best practices.

Building the Workflow

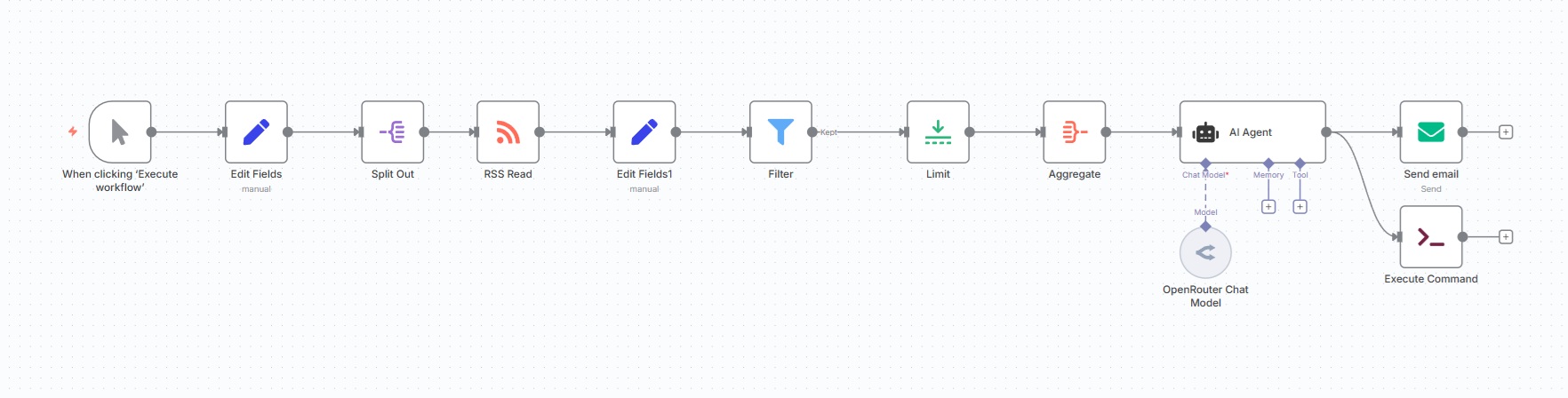

With the infrastructure running smoothly, I dove into n8n's workflow ecosystem. After studying various examples, I assembled my own pipeline:

- Feed Aggregation: The workflow fetches content from specified RSS feeds

- AI Processing: An AI agent analyzes the aggregated articles using carefully crafted prompts

- Delivery: The curated content is formatted in HTML and sent via email, simultaneously updating my website's project page

The Curation Criteria

The prompt engineering became the most crucial and challenging part of this project. I wanted a specific mix of content:

- 6-8 articles on AI applications in ecological and environmental fields

- 2-3 articles on general AI research breakthroughs

- 1-2 articles on major tool or model releases

- 0-2 articles on educational posts covering important techniques or frameworks

- 0-1 article on significant industry news

The Hallucination Problem

The biggest technical hurdle? The AI agent kept hallucinating AI involvement in articles that merely mentioned environmental technology. A robotics article about soft sensors became "AI-powered navigation systems." A water quality monitoring project suddenly involved "machine learning analysis", despite no mention of ML in the source.

This forced me to dedicate a significant portion of my prompt to verification requirements. The agent now must explicitly identify and quote the exact sentences proving AI/ML/DL usage before selecting any environmental article. If it can't cite specific techniques. like neural networks, computer vision, transformers, reinforcement learning, the article gets excluded, no matter how relevant it seems.

Counterintuitively, I learned that telling an LLM not to do something often backfires. Instead of saying "don't fabricate AI usage," I had to build a positive verification framework: "you MUST cite the specific sentence proving AI is used."

Output Formatting

The HTML formatting presented its own challenge. Since I'm not comfortable with HTML styling, I had AI generate the template. Through iterative refinement, I arrived at a clean, readable format with proper spacing and typography.

Title generation required fine-tuning through few-shot prompting. Early versions produced generic titles like "AI for Climate Monitoring" or "New AI Breakthrough in Species Detection." I taught the agent to lead with specific techniques instead: "CNNs Detect Deforestation in Satellite Data" or "Transformer-Based Species Classification."

Key Takeaways

This project delivered several unexpected lessons:

- Reverse proxy complexity: Sometimes the pragmatic solution (cronjob-triggered container) beats the theoretically better one (always-on SSL setup)

- Prompt engineering is debugging: Iteratively testing and refining prompts mirrors traditional software debugging

- Negative instructions fail: LLMs respond better to "do this verification" than "don't hallucinate"

- Specificity matters: Vague titles and summaries indicate the AI is working from inference rather than facts

Try It Yourself

If you'd like to build your own tailored newsletter, you can set up an n8n instance and import my workflow JSON from GitHub. Just modify the RSS feed Urls, the credentials and adjust the selection criteria to match your interests.

The beauty of this system is its modularity: swap the environmental AI focus for cybersecurity, web development, or any other domain. The verification framework I built for environmental articles can be adapted to catch hallucinations in any technical field.

The Prompt: A Deep Dive

The prompt evolved through dozens of iterations to handle the hallucination problem. Below is the final version. Click to expand if you're interested in the technical details.

View Full Prompt (Click to Expand)

# AI Newsletter Curation Agent

## **Mission Context**

You are a **curation agent** responsible for assembling a **weekly AI newsletter** tailored to **graduate-level AI/ML researchers in Germany**, with a **focus on environmental and ecological AI applications**.

Your task:

From the provided JSON dataset of articles, **select exactly 10 articles** (or fewer if the quality threshold is not met) that meet strict **AI verification**, **technical relevance**, and **ecological application** criteria.

Only ever output the provided HTML Formatting. No other explaining text from your side.

---

## **Processing Overview**

You will proceed in the following stages:

1. **Initial Tagging:**

For *every* article, select exactly **three descriptive words** summarizing its content.

→ Proceed **only** if at least one of these words relates directly to AI or ML.

2. **AI/ML Verification (Critical):**

For every candidate article, confirm explicit use of **AI, ML, or DL** techniques.

Cite the **exact sentence(s)** from the source text that prove AI/ML/DL usage.

- No inference or speculation is allowed.

- No vague or future-use statements count as verification.

- Explicit terminology is required.

3. **Tiered Selection:**

Prioritize environmental AI first (Tier 1), then research and tool releases (Tier 2).

Apply weighted criteria and exclusion filters strictly.

4. **Output Construction:**

For each selected article, generate a **technical title** (≤50 characters) and a **concise summary (3–4 sentences)** in the required HTML format.

---

## **Stage 1: AI/ML Verification Protocol**

**For each article, you MUST verify:**

### Inclusion Rules

An article **qualifies** if it explicitly mentions:

- AI, Machine Learning (ML), or Deep Learning (DL)

- Specific techniques (e.g., CNNs, RNNs, Transformers, GANs, diffusion models)

- Model training, inference, or evaluation steps

- Explicit references to data-driven modeling or algorithmic learning methods

**You must quote at least one exact sentence** (or line) proving this.

### Exclusion Rules

Immediately exclude if:

- No explicit mention of AI/ML/DL terms or techniques

- Only mentions “smart,” “automated,” “intelligent,” “optimized,” etc. without ML context

- Mentions “potential use of AI” or “future AI integration”

- Refers to robotics, sensors, or monitoring without describing AI-based control or analysis

- Focuses on infrastructure, business, or deployment (not research/technical content)

**Example (Reject):**

> “The system monitors air quality using smart sensors.”

>

>

> → No ML technique mentioned → Exclude.

>

**Example (Include):**

> “The model uses convolutional neural networks to classify plant species.”

>

>

> → Explicit CNN mention → Include.

>

---

## **Stage 2: Tiered Selection Criteria**

### **TIER 1 (6–8 articles): Environmental & Ecological AI**

**Core Inclusion Topics:**

- AI for climate modeling, carbon tracking, biodiversity, pollution mitigation

- ML for renewable energy forecasting or optimization

- DL for remote sensing or satellite-based ecological assessment

- Reinforcement learning for resource allocation or environmental management

- AI methods evaluating sustainability or reducing computational footprint (“Green AI”)

**Requirements:**

- Must include a verifiable AI/ML/DL method (with quote)

- Must have measurable environmental relevance

- Prioritize peer-reviewed or research-lab sources

**Hard Exclusions:**

- Environmental studies with no AI usage

- Robotics/sensing projects without ML control or perception

- Chemistry/materials science articles with no data-driven modeling

- Speculative or conceptual AI proposals

---

### **TIER 1B (2–3 articles): Foundational AI Research**

Include groundbreaking AI research relevant to ecological applications or general ML advancement:

- New architectures, training paradigms, optimization breakthroughs

- Efficiency improvements (e.g., sparse training, quantization, distillation)

- Research with open-source code or reproducible results

Exclude:

- Minor parameter tweaks, incremental papers, or derivative work.

---

### **TIER 2 (2–4 articles): Broader AI Developments**

**Possible categories:**

1. **Major Model or Tool Releases (1–2)**

- Major open-source foundation models or frameworks (e.g., new LLMs, multimodal AI)

- Exclude incremental version updates or cloud service announcements.

2. **Technical Education (0–2)**

- Tutorials or explainers of advanced architectures (e.g., diffusion models, graph neural networks)

- Must demonstrate technical rigor beyond beginner content.

3. **Significant Industry News (0–1)**

- Include only if it reflects a meaningful technical or research milestone.

---

## **Deduplication and Quality Assurance**

When multiple articles describe the same story, prefer:

1. Original research paper or institutional release

2. Technical blog or reproducible notebook

3. High-quality technical journalism

Exclude duplicates or derivative summaries.

---

## **Stage 3: Output Construction**

For each selected article, provide the following structured information:

1. **URL:** Extracted directly from the JSON `url` field

2. **Title (≤50 chars):**

- Must reference a specific model, technique, or task

- Avoid generic phrasing (“AI for...”)

- Prefer technical and informative phrasing

- Examples:

GOOD: “CNNs Detect Forest Degradation”

GOOD: “Diffusion Models Forecast Climate Trends”

BAD: “AI for Forest Health Monitoring”

BAD: “AI Revolutionizes Ecology”

3. **Summary (3–4 sentences, 60–80 words):**

- **Sentence 1:** Describe specific ML technique(s) used

- **Sentence 2:** Explain methodological contribution or novelty

- **Sentence 3:** Quantify outcomes or environmental impact

- **Sentence 4:** Explain relevance to AI researchers (innovation, reproducibility, etc.)

**Language style:**

- Formal academic tone

- Use precise technical terms

- Avoid marketing adjectives

- Write for ML-aware readers

---

## **Stage 4: Output Format**

Output **only valid HTML** in the following structure:

```html

<ul style="list-style-type: none; padding: 0; margin: 0;">

<li style="margin-bottom: 24px; padding-bottom: 16px; border-bottom: 1px solid #e0e0e0;">

<strong><a href="[EXACT_URL_FROM_JSON]" style="color: #1a73e8; text-decoration: none; font-size: 16px;">[Technical Title Max 50 Chars]</a></strong><br>

<span style="color: #5f6368; font-size: 14px; line-height: 1.6;">[3-4 sentence technical summary...]</span>

</li>

<!-- Repeat for each article -->

</ul>

```

**No introductory or concluding text.**

---

## **Final Validation Checklist**

**Relevance & Balance**

- 6–8 environmental AI articles

- 2–4 research/tool/education articles

**Verification**

- Each article has an explicit AI/ML/DL mention

- Exact sentence(s) quoted during verification step

**Content Integrity**

- No duplicates or near-duplicates

- No speculative or fabricated claims

- No “AI for” generic phrasing in titles

- Each summary is specific and reproducible

**Output Format**

- Valid HTML only

- All URLs match JSON input

- Titles ≤ 50 characters

- Summaries 60–80 words

## Input Data

Process the following JSON data:

{{JSON.stringify($json.data)}}

Want to see the newsletter in action? The latest curated edition is automatically published below every Saturday.